Georgia, Year 13, explores the British retreat at Dunkirk and argues that Hitler’s greatest mistake was at this point in the war.

Dunkirk was the climactic moment of one of the greatest military disasters in history. From May 26 to June 4, 1940, an army of more than three hundred thousand British soldiers was essentially chased off the mainland of Europe, reduced to an exhausted mob clinging to a fleet of rescue boats while leaving almost all of its weapons and equipment behind for the Germans to pick up. The British Army was crippled for months, and had the Royal Air Force and Royal Navy failed, Germany might have conducted its own D-Day, giving Hitler the keys to London. Yet Dunkirk was a miracle, and not due to any tactical brilliance from the British.

In May 1940, Hitler was on track for a decisive victory. The bulk of the Allied armies were trapped in pockets along the French and Belgian coasts, with the Germans on three sides and the English Channel behind. With a justified lack of faith in their allies, the British began planning to evacuate from the Channel ports. Though the French would partly blame their defeat on British treachery, the British were right. With the French armies outmanoeuvred and disintegrating, France was doomed. And really, so was the British Expeditionary Force. There were three hundred thousand soldiers to evacuate through a moderate-sized port whose docks were being destroyed by German bombs and shells. Britain would be lucky to evacuate a tenth of its army before the German tanks arrived.

Yet this is when the ‘miracle’ occurred. It did not come in the form of an ally at all. Instead, it came from the leader of the Nazis himself. On May 24th, Hitler and his high command hit the stop button. Much to their dissatisfaction, Hitler’s tank generals halted their panzer columns, which could very easily have sliced like scalpels straight to Dunkirk. The Nazis’ plan now was for the Luftwaffe to pulverise the defenders until the slower-moving German infantry divisions caught up to finish the job. It remains unclear why Hitler issued the order. It is possible that he was worried that the terrain was too muddy for tanks, or perhaps he feared a French counterattack. Hitler later claimed, at the end of the war, that he had allowed the British Expeditionary Force to get away simply as a gesture of goodwill and to try to encourage Prime Minister Winston Churchill to make an agreement with Germany that would allow it to continue its occupation of Europe. Whatever the reason, while the Germans dithered, the British moved with a speed that Britain would rarely display again for the rest of the war.

It was not just the Royal Navy that was mobilised. From British ports sailed yachts, fishing boats and rowing boats; anything that could float was pressed into service.

Under air and artillery fire, the motley fleet evacuated 338,226 soldiers. As for Britain betraying its allies, 139,997 of those men were French soldiers, along with Belgians and Poles. Even so, the evacuation was incomplete. Some 40,000 troops were captured by the Germans. The Scotsmen of the 51st Highland Division, trapped deep inside France, were encircled and captured by the 7th Panzer Division commanded by Erwin Rommel. The British Expeditionary Force did save most of its men, but almost all its equipment—from tanks and trucks to rifles—was left behind.

In spite of this, the British could and would continue to view the evacuation of Dunkirk as a victory. Indeed, the successful evacuation gave Britain a lifeline to continue the war. In June 1940, neither America nor the Soviet Union was at war with the Axis powers. With France gone, Britain and its Commonwealth partners stood alone. Had Britain capitulated to Hitler or signed a compromise peace that left the Nazis in control of Europe, many Americans would have been dismayed, but not surprised.

Hitler’s greatest mistake was giving the British public enduring hope, ruling out any chance of them suing for peace. He gave them an endurance that was rewarded five years later on May 8, 1945, when Nazi Germany surrendered. A British writer whose father fought at Dunkirk wrote that the British public were under no illusions after the evacuation. “If there was a Dunkirk spirit, it was because people understood perfectly well the full significance of the defeat but, in a rather British way, saw no point in dwelling on it. We were now alone. We’d pull through in the end. But it might be a long grim wait…”

When someone says ‘asylum’ in the context of psychology, what do you immediately think of? I can safely assume most readers are picturing haunted Victorian buildings, animalistic patients rocking in corners and scenes of general inhumanity and cruelty. However, asylum has another meaning in our culture. Asylum, when referring to refugees, can mean sanctuary, hope and care. Increasingly, people are exploring this original concept of asylum and asking whether we, in a time when mental illness is more prevalent than ever, can reclaim the asylum. Or is it, and institutional care in general, confined to history?

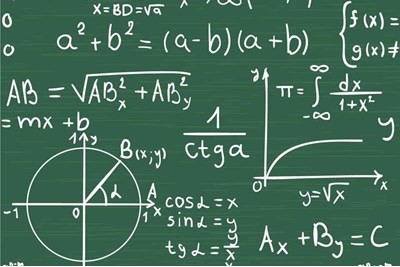

As the lessons progressed, I started enjoying this method of teaching as it allowed me to understand not only how each formula and rule had come to be, but also how to derive them and prove them myself – something which I find incredibly satisfying. I also particularly like the fact that a specific problem set will test me on many topics. This means that I am constantly practising every topic and so am less likely to forget it. Also, if I get stuck, I can easily move on to the next question.

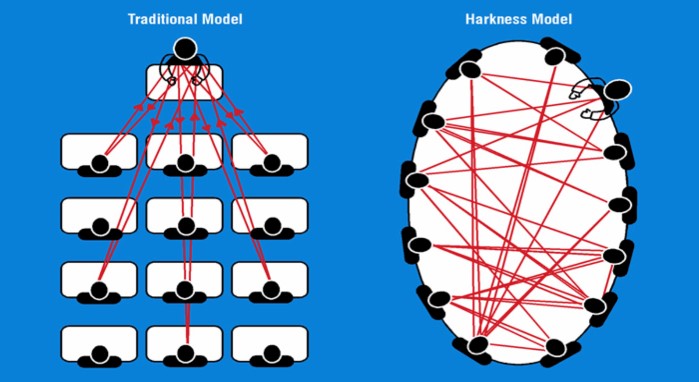

Moreover, I very much enjoyed seeing how other people completed the questions, as they would often have other methods which I found far easier than the way I had used. The other benefit of the lesson being more like a discussion is that it has often felt like having multiple teachers, as my fellow class members have all been able to explain the topics to me. I have found this very useful as I am in a small class of only five; however, I think that the method would not work as well in larger classes.

Both music and languages share the same building blocks as they are compositional. By this, I mean that they are both made of small parts that are meaningless alone but when combined can create something larger and meaningful.