Anna (Year 13) looks back to our earliest beginnings as a civilisation in the Indo-European world, discovering that there is only one route to the reconstruction of Indo-European culture that offers any hope of reliability and that is language.

Swedish, Ukrainian, Punjabi and Italian: to many of us, these languages are as different and distinct as they come. But it has been discovered that, in the same way that dogs, sheep and pandas have a common ancestor, languages can also be traced back to a common tongue. Thus, Dutch is not merely a bizarrely misspelled version of English, and the resemblances between our languages amount to more than Latin words being imported into native dialects in the Middle Ages.

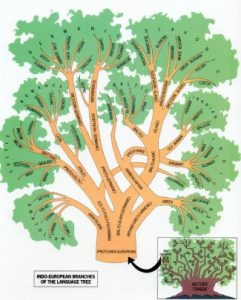

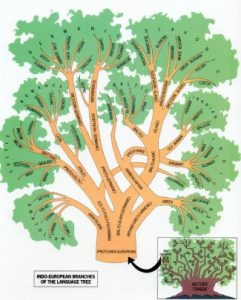

In the twelfth century, an Icelandic scholar concluded that Englishmen and Icelanders ‘are of one tongue, even though one of the two [tongues] has been changed greatly, or both somewhat.’ He went on to say that the two languages had ‘previously parted or branched off from one and the same tongue’. Thus, he noticed the common genetic inheritance of our languages and anticipated the model of a tree of related languages, which later came to dominate how we look at the evolution of the Indo-European languages. We call the ancestral language at the root of this tree Proto-Indo-European (PIE), a language spoken by the linguistic ancestors of much of Europe and Asia between approximately 4,500 and 2,500 B.C.

The Indo-European Family Tree

But what actually is it? Well, let me start simply. Consider the following words: pedis, ποδος (pronounced ‘podos’), pada, foot. They all mean the same thing (foot) in Latin, Ancient Greek, Sanskrit and English respectively. You will notice, I hope, the remarkable similarity between the first three words. English, on the other hand, sticks out slightly. Yet it has exactly the same root as the other three. If I were to go back to one of the earliest recorded Germanic languages, Gothic, a relative of English, you may perhaps notice a closer similarity: fotus. A pattern emerges: where the other languages have the sound p, the Germanic languages have f, and where they have d, Germanic has t. This is just one example of many, and it is regular sound correspondences like these that led Jacob Grimm to formulate his law.
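For readers who like to see a pattern spelled out mechanically, the correspondence can be sketched in a few lines of Python. This is only a rough illustration under heavy assumptions: it ignores vowels entirely, treats letters as if they were sounds, leaves out the aspirated consonants and the known exceptions to the law, and fills in the rest of the two stop series (t, k, b, g), which the words above do not show. The names `GRIMM_SHIFT`, `consonant_skeleton`, `shift_consonants` and `matches_germanic` are invented for this example.

```python
# Illustrative sketch only: a simplified slice of Grimm's law.
# Assumption: letters stand in for sounds, and vowels are ignored.
GRIMM_SHIFT = {
    "p": "f", "t": "þ", "k": "h",  # voiceless stops become fricatives
    "b": "p", "d": "t", "g": "k",  # voiced stops become voiceless stops
}
VOWELS = set("aeiou")


def consonant_skeleton(word):
    """Return the consonants of a word, in order, dropping the vowels."""
    return [ch for ch in word.lower() if ch not in VOWELS]


def shift_consonants(word):
    """Apply the simplified shift to each consonant of a non-Germanic stem."""
    return [GRIMM_SHIFT.get(ch, ch) for ch in consonant_skeleton(word)]


def matches_germanic(non_germanic_stem, germanic_stem):
    """Check whether the shifted consonants line up with the Germanic stem."""
    return shift_consonants(non_germanic_stem) == consonant_skeleton(germanic_stem)


# Stems of the Latin, Greek and Sanskrit words, compared with Gothic 'fot-'.
for stem in ("ped", "pod", "pad"):
    print(stem, "->", shift_consonants(stem), matches_germanic(stem, "fot"))
```

Each of the three stems has the consonants p and d, which the table turns into f and t, the same consonants as in Gothic fotus. Real comparative work is far more careful than this, but the sketch shows why linguists speak of a "law": the same substitution applies word after word.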

Grimm’s law is a set of statements, named after Jacob Grimm, which sets out the regular correspondences between the consonants of the Germanic languages and those of other Indo-European languages. Certainly, single words may be borrowed from a language (like the use of the words cliché, from the French, or magnum opus, from Latin), but it is extremely unlikely that an entire grammatical system would be. Therefore, the similarities between modern Indo-European languages are best explained as the result of a single ancestral language diverging into its various daughter languages. And although we can never know what it looked like, since it was never written down, we can estimate what it sounded like. This is because, using sound laws such as Grimm’s Law, we can reconstruct not only individual words, but also sentences and even stories.

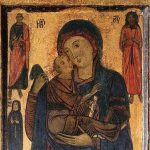

In 1868, German linguist August Schleicher used reconstructed Proto-Indo-European vocabulary to create a fable in order to hear some approximation of PIE. Called “The Sheep and the Horses”, the short parable tells the story of a shorn sheep who encounters a group of unpleasant horses. As linguists have continued to discover more about PIE, this sonic experiment continues, and the fable is periodically updated to reflect the most current understanding of how this extinct language would have sounded when it was spoken some 6,000 years ago. Since there is considerable disagreement among scholars about PIE, no single version can be considered definitive: Andrew Byrd, a University of Kentucky linguist, joked that the only way we could know for sure what it sounded like is if we had a time machine.

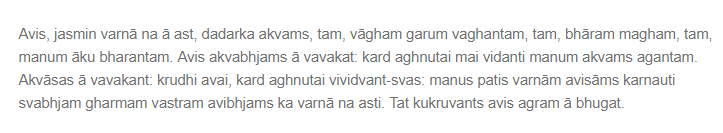

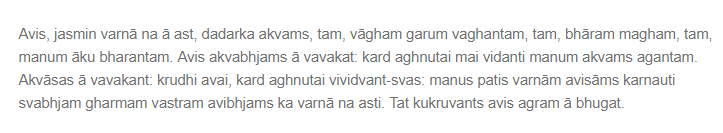

The earliest version read as follows:

(The audio of a later version, read by Andrew Byrd, can be found at the following link: https://soundcloud.com/archaeologymag/sheep-and-horses)

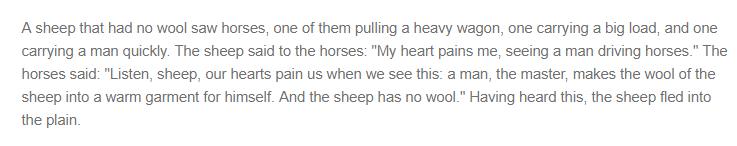

Here is the fable in English translation:

Though seemingly nonsensical, it is definitely exciting, and when you take a metaphorical microscope to it, you can notice similarities in words and grammar, particularly with those of Latin and Ancient Greek. What is the point, though, in reconstructing a language no longer spoken?

Firstly, the world wouldn’t be what it is today had it not been for the Indo-Europeans. If you’re reading this article, chances are that your first language is an Indo-European language, and it’s also very likely that all of the languages you speak are Indo-European languages. Given how powerfully language shapes the range of thoughts available for us to think, this fact exerts no small influence on our outlook on life and therefore, by extension, on our actions.

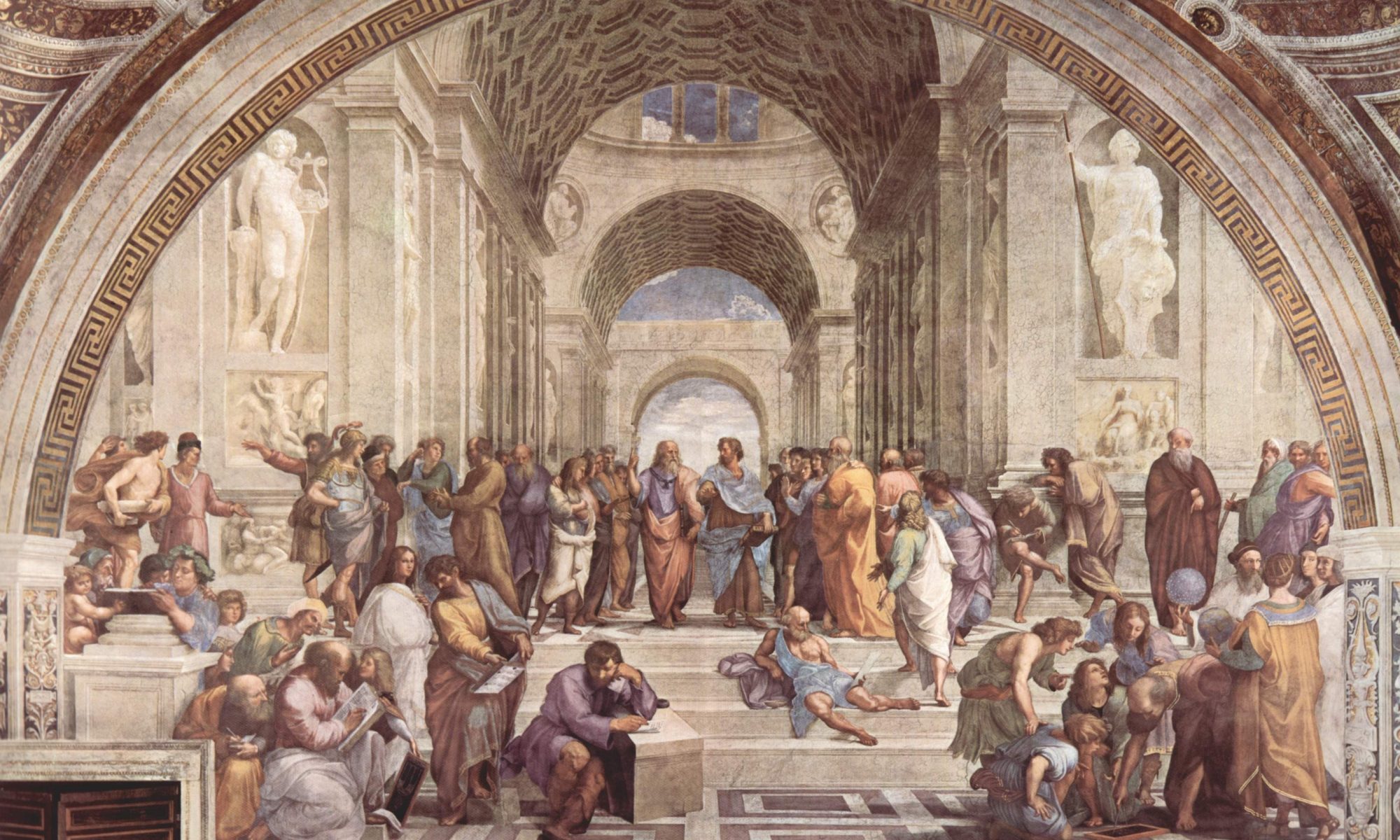

Secondly, as a society, we are fascinated by our history, perhaps because examining our roots (to continue the tree metaphor) can help us understand where we may be headed. Although many archaeologists are hesitant to trust linguistic data, by gaining an insight into the language of the PIE world, we can make inferences about its speakers’ culture and in turn learn more about our own. One example is Hartwick College archaeologist David Anthony’s discovery of a mass of sacrificed dog and wolf bones in the Russian steppes. Consulting historical linguistics and ancient literary traditions to better understand the archaeological record, he and his team found that historical linguists and mythologists have long linked dog sacrifice to an important ancient Indo-European tradition, the roving youthful war band (known as a ‘koryos’ in reconstructed PIE). This tradition, which involved young men becoming warriors in a winter sacrificial ceremony, could help explain why Indo-European languages spread so successfully. Previous generations of scholars imagined hordes of Indo-Europeans on chariots spreading their languages across Europe and Asia at the point of the sword. But Anthony thinks Indo-European spread instead by way of widespread imitation of Indo-European customs, which included, for example, feasting to establish strong social networks. The koryos could have simply been one more feature of Indo-European life that other people admired and adopted, along with the languages themselves.

In this way, language lets us learn about the customs of our prehistoric ancestors. Indo-European studies is relevant because, as powerfully as the Indo-European worldview has influenced our modern social structure and thought, there are also many ways in which it is strikingly different from our own. Studying it gives you that many more perspectives to draw from in creating your own worldview.

National Historical Museum Stockholm: A bronze Scandinavian plate from the 6th century A.D. depicts a helmeted figure who may be the god Odin dancing with a warrior wearing a wolf mask.

However, some people think that cities face a choice of building up or building out, asserting that there’s nothing wrong with a tall building if it gives back more than it receives from the city. An example of a building that achieves this is the £435 million Shard, which attracted substantial redevelopment to the London Bridge area. So, is this a way for London to meet rising demand to accommodate growing numbers of residents and workers?

Dunkirk was the climactic moment of one of the greatest military disasters in history. From May 26 to June 4, 1940, an army of more than three hundred thousand British soldiers was essentially chased off the mainland of Europe, reduced to an exhausted mob clinging to a fleet of rescue boats while leaving almost all of its weapons and equipment behind for the Germans to pick up. The British Army was crippled for months, and had the Royal Air Force and Royal Navy failed, Germany would have managed to conduct its own D-Day, giving Hitler the keys to London. Yet Dunkirk was a miracle, and not due to any tactical brilliance from the British.

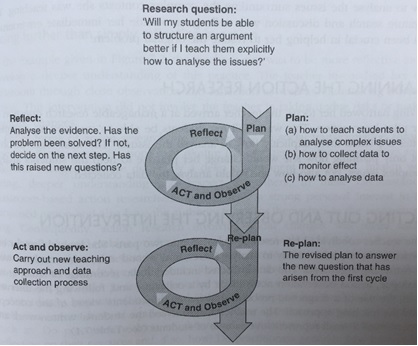

But while PEE in theory offers a sound approach to structuring extended writing in history, it has been criticised for unintentionally removing important steps in historical thinking. Fordham, for example, noticed that the use of such devices in his practice meant that there was too much ‘emphasis on structured exposition [which] had rendered the deeper historical thinking inaccessible’ (Fordham, 2007). Pate and Evans similarly argued that ‘historical writing is about more than structure and style; the construction of history is about the individual’s reaction to the past’ (Pate and Evans, 2007). Therefore, too much emphasis on the construction of the essay rather than the nuances of an argument or an engagement with other arguments, as Fordham argues, can create superficial success. Further problems were identified by Foster and Gadd (2013), who theorised that generic writing frame approaches such as the PEE tool were having a detrimental effect on pupils’ understanding and deployment of historical evidence in their history writing.

Thus far, the comparisons have allowed us to make some tentative observations. Whilst these do not seem to show an established pattern yet, there does seem to be a greater sense of originality and creativity in some of the non-PEE responses. Pupils seemed to produce more free-flowing ideas and were making more spontaneous links between those ideas, showing a higher quality of thinking. In addition, a few of the participating teachers noticed that their questioning became more tailored to developing the ideas and thinking of the pupils they taught rather than getting them to write something particular. However, others noticed that pupils were already well versed in PEE and so the change in approach may have had less of an effect. Other pupils seemed to feel less secure with a freeform structure. In order to encourage the more positive effects, our next cycle of teaching will experiment with different ways of planning essays that provide pupils with a way of organising ideas more visually and focus on the development of our questioning to further develop the higher quality thinking we noticed with some classes.
