Louisa (Y13 Music Rep) investigates whether Classical Music is still relevant to young people of today and what can be gained from listening to it.

Classical music, once at the forefront of popular culture and entertainment, is nowadays often seen as a dying art. Frequently, classical music, an umbrella term for music spanning the baroque, classical and romantic eras, is described as an ‘elitist form of artistic expression’ that is only enjoyed by the old, the white and the rich. Its place as the leading form of musical entertainment has been taken by modern genres such as pop, rock and rap, which generally do not share the musical complexity of much of the classical and romantic music that used to dominate concert halls.

It is clear that interest in and enjoyment of classical music have decreased over the years, most prominently among today’s youth, despite its increasing accessibility through platforms such as Spotify and YouTube. However, just because interest has declined does not mean that “classical music is irrelevant to today’s youth”, as Radio 1 DJ ‘Kissy Sell Out’ publicly argued[1]. It is important that those with a platform in the music industry challenge the notion that classical music is only for a select group of elites, as there is so much to be gained from engaging with classical music, from education to media to understanding successful music in the modern world.

An area in which classical music is of utmost importance is education. Headlines such as “listening to an hour of Mozart a day can make your baby smarter”, outlining the so-called ‘Mozart effect’, frequently dominate the press. This longstanding myth that listening to Mozart as an infant correlates with intelligence has since been debunked as having little scientific merit.

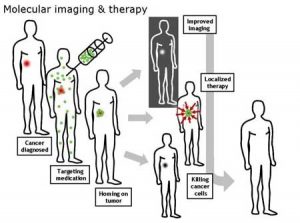

Above: DJs including William Orbit and DJ Tiesto have famously remixed classical music, including Samuel Barber’s Adagio for Strings. Does this make the original more relevant?

However, there is evidence behind the notion that classical music has a positive effect on brain development and wellbeing. A study undertaken in 2014 by Zuk, Benjamin, and Kenyon found that adults and children with musical training exhibited cognitive advantages over their non-musically-trained counterparts. Adults with prior musical training performed better on tests of cognitive flexibility, working memory, and verbal fluency; and the musically-trained children performed better in verbal fluency and processing speed. The musically-trained children also exhibited enhanced brain activation in the areas associated with ‘executive functioning’ compared to children who had no previous musical training.

A further study, by the National Association for Music Education and researchers from the University of Kansas, found that participation in music programmes in American high schools was associated with higher GPA, SAT and ACT scores, IQ, and other standardized test scores, as well as fewer disciplinary problems, better attendance, and higher graduation rates.

[1] https://www.independent.co.uk/arts-entertainment/classical/features/radio-1-dj-kissy-sell-out-classical-music-is-irrelevant-to-todays-youth-2282561.html 23/03/18

These scores can have a great impact on future quality of life, as they directly contribute to which college one is able to attend as well as to future jobs and income.

Another important use of classical music is in modern day media. Film music is a genre that directly stems from classical music. Its widespread use in movies and television means classical music is constantly permeating our daily lives and it would be naïve to pretend it is irrelevant.

Film music serves several purposes in films, including enhancing the emotional impact of scenes and inducing emotional reactions in viewers. The effects are widespread and particularly evident when watching a scene without the accompanying music. For example, watching the famous shower scene from Hitchcock’s Psycho without Herrmann’s music makes the scene appear almost comical, and it certainly lacks the fear and suspense the scene is meant to evoke.

The film music industry is very successful, especially among younger generations, with film music scoring the highest number of downloads of instrumental music. Similarly, the videogame music industry has recently taken off in terms of popularity and recognition within the music community. Videogame music has striking similarities to both film music and elements of classical music, with the main difference being that it must be able to repeat indefinitely to accompany gameplay. The Royal Philharmonic Orchestra recently announced that it was to play a PlayStation concert to celebrate videogame music. James Williams, director of the RPO, describes the planned concert as “signpost for where orchestral music is expanding”.

Whilst the music itself is not classical, it uses many elements of classical composition and is significantly influenced by it. It is arguably the modern genre most similar to music of the classical era. This shows that it is not always obvious where derivatives of classical music appear in the media consumed by young people, yet the stigma is still very present. James Williams argues that if classical music were rebranded as ‘orchestral music’ to include popular film and videogame music, it would help to destigmatise the term. Classical music is vital as the basis of these new and expanding genres of music that are very popular among younger generations.

Within society, there are many other instances in which classical music is used and very relevant, although not in its original context. In advertising, classical music and derivatives of classical music are widely used to promote specific products and target specific groups of people. A 2014 study from North Carolina State University shows how the correct musical soundtrack in an advert can “increase attention, making an ad more likely to be noticed, viewed, and understood; enhance enjoyment and emotional response; aid memorability and recall; induce positive mood; forge positive associations between brands and the music through classic conditioning; enhance key messages; influence intention and likelihood to buy”.

The brain has evolved to encode emotional memories more deeply than non-emotional ones, and memories formed with a relevant, resonant musical component are stored as emotional memories. This means that adverts with suitable music are more likely to be remembered and acted upon. Clearly, regardless of whether or not classical music is actively listened to by young people, it plays a very active part in our society and therefore cannot be labelled as irrelevant.

Above: Film music for Harry Potter and the Philosopher’s Stone being performed in real time alongside the movie.

Despite classical music being stereotypically more popular within older social circles, it is still very relevant for today’s youth, whether in or out of its traditional context. Claims that the popularity of classical music is decreasing can be disproved if the definition of classical music is expanded to include similar genres such as film, videogame and advertising music, which are all very popular and relevant, in addition to the huge benefits classical music has for the cognitive development of young people. Therefore, it cannot be argued that classical music is irrelevant to today’s youth.

Further Reading:

This is your brain on music – Daniel Levitin, Dutton Penguin, 2006

https://www.gramophone.co.uk/blog/editors-blog/the-relevance-of-classical-music

https://www.theguardian.com/music/2009/apr/02/classical-music-children

Have a listen to Barber’s Adagio for Strings, and the remixes by Orbit and Tiesto, below:

Barber: https://www.youtube.com/watch?v=N3MHeNt6Yjs

Orbit: https://www.youtube.com/watch?v=VIbIHxKh9bk

Tiesto: https://www.youtube.com/watch?v=8CwIPa5VM18

Deconstruction is a theory principally put forward in around the 1970s by a French philosopher named Derrida, who was a man known for his leftist political views and apparently supremely fashionable coats. His theory essentially concerns the dismantling of our excessive loyalty to any particular idea, allowing us to see the aspects of truth that might be buried in its opposite. Derrida believed that all of our thinking was riddled with an unjustified assumption of always privileging one thing over another; critically, this privileging involves a failure to see the full merits and value of the supposedly lesser part of the equation. His thesis can be applied to many age-old questions: take men and women for example; men have systematically been privileged for centuries over women (for no sensible reason) meaning that society has often undervalued or undermined the full value of women.

Often regarded as a cornerstone of ancient literary education, Euripides was a tragedian of classical Athens. Along with Aeschylus and Sophocles, he is one of the three ancient Greek tragedians for whom a significant number of plays have survived. Aristotle described him as “the most tragic of poets” – he focused on the inner lives and motives of his characters in a way that was previously unheard of. This was especially true in the sympathy he demonstrated to all victims of society, which included women. Euripides was undoubtedly the first playwright to place women at the centre of many of his works. However, there is much debate as to whether by doing this, Euripides can be considered to be a ‘prototype feminist’, or whether the portrayal of these women in the plays themselves undermines this completely.

Medea is undoubtedly a strong and powerful figure who refuses to conform to societal expectations, and through her Euripides to an extent sympathetically explores the disadvantages of being a woman in a patriarchal society. Because of this, the text has often been read as proto-feminist by modern readers. In contrast with this, Medea’s barbarian identity, and in particular her filicide, would have greatly antagonised a 5th Century Greek audience, and her savage behaviour caused many to see her as a villain.

Although this version is now lost, we know that he portrayed a shamelessly lustful Phaedra who directly propositioned Hippolytus on stage, which was strongly disliked by the Athenian audience. The surviving play, entitled simply ‘Hippolytus’, offers a much more even-handed and psychologically complex treatment of the characters: Phaedra admirably tries to quell her lust at all times. However, it could be argued that any pathos for her is lost when she unjustly condemns Hippolytus by leaving a suicide note stating that he raped her, which she does partly to preserve her own reputation, but also perhaps to take revenge for his earlier insults to her and her sex. It is debatable as to whether Euripides is trying to evoke sympathy for Phaedra and her unfortunate situation, or whether through her revenge she can ultimately be seen as a villain in the play.

What is it that renders Jane Eyre and Wuthering Heights simply better novels than Twilight?
