As some of you might have heard in assembly last week, we have been discussing 41 Questions surrounding technology (we’d love for you to have a look – feel free to contact us about your thoughts!), particularly whether technology can empower us. We think that technology can always be used for good in the right hands; as with everything, if we have good intentions these resources can be valuable for us all. But, in the same way, technology can also harm us if misused.

We can look at this on a small scale by thinking about how our use of technology either closes or opens our eyes to the nature around us. I remember that when I was younger, on long car trips, I used to be amazed at the surrounding fields, and I entertained myself by imagining the raindrops racing each other on the car window. If I’m honest, I now spend most of my car journeys staring down at my phone, immersed in the screen. I’m not saying that technology has stopped us from appreciating natural wonders; if we use technology well, it can help us find them. However, technology does provide us with a distraction: a tiny screen seems to answer any question and transport you virtually around the world, which seems more exciting than staring at the endless fields (which frankly all look the same) out of the car window. So that’s an example of how we can use technology positively or negatively.

Another example is our use of ‘new’ technology, such as the laptop and printer used to write (and print) this article, compared to ‘old’ technology such as a pencil and pen. If used correctly, new technology can maximise the efficiency and quality of our learning, and this was visible in the pandemic. Can you imagine the pandemic without Teams and OneNote connecting us as a school? I certainly can’t. But then again, this ‘new’ technology, such as our phones, also has ample opportunities to distract us. Even on a small scale, we are in control of whether technology is used for good or malicious purposes.

What about the technology we can’t control? How can our intentions change the impact of technology if we can’t control it? Let’s look at Artificial Intelligence, one technological development we can’t easily control. In basic terms, artificial intelligence is the technology that can ‘think’, ‘learn’, and make its own educated decisions. It does this by noticing patterns in data sets and applying these to new and often complex problems.

AI bias – the problem

The morals behind AI are a commonly disputed topic, but we want to shed light on the bias which is deep-rooted in these systems, and the real-world effect it has on us as the users, including women. Due to the lack of data surrounding marginalised groups, bias works its way unnoticed into our programs and AI, which is nothing more than a mirror of society. Machine learning works under the assumption that we can take data from the past, find patterns in it, and then use those patterns to predict the future. If the past data and patterns include these biases, they will automatically be worked into future solutions.
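To make this idea concrete, here is a minimal sketch in Python. It is not how any real AI product works: the groups, the outcomes and the numbers are all invented for illustration, and the “model” simply learns the most common past outcome for each group. Even so, it shows how a pattern in old data becomes a prediction about the future.

```python
from collections import Counter, defaultdict

# Hypothetical past records: (group, outcome). All numbers are made up.
# Group B has far fewer records, and most of them are negative.
history = (
    [("A", "accepted")] * 80 + [("A", "rejected")] * 20
    + [("B", "accepted")] * 3 + [("B", "rejected")] * 7
)

def train(records):
    """Learn the most common past outcome for each group."""
    counts = defaultdict(Counter)
    for group, outcome in records:
        counts[group][outcome] += 1
    # The "model" is just the majority outcome seen for each group.
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}

model = train(history)
print(model)  # {'A': 'accepted', 'B': 'rejected'}
```

Nobody wrote a rule saying “reject group B”. The model picked that up from the past data on its own, which is why biased data leads to biased predictions.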

There is no such thing as “neutral” data: it is all biased in some way!

We have four examples of this bias in AI.

1. Siri is not responding to you

Over the past few years, the use of AI assistants has grown. The “wake word” (such as ‘Alexa’ or ‘Siri’) allows the AI to come alive and assist you rapidly and smoothly. But many women, including ourselves, have become super frustrated when smart assistants fail to recognise our instructions, with many conversations going: “Hey Siri” … “HEY SIRI” … “HEYY SIRIII!!” with no response. However, you are not alone in this battle to set a 20-minute timer for your fish fingers: a YouGov study revealed that 66% of female owners stated that their device often fails to respond to their voice commands, compared to 54% of male owners. This bias has worked its way into our devices because the machines have been trained using predominantly male voices (those of a lower pitch), meaning that these voice assistants then struggle to understand and respond to higher-pitched voices.

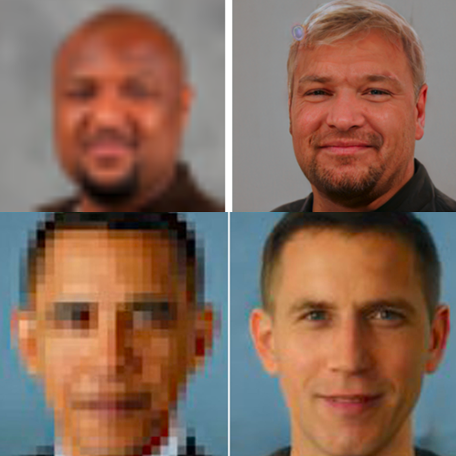

2. Resolution improvement tool

This tool was designed to turn pixelated photos into high-resolution images. There are several situations where this showed bias, such as when it turned a pixelated yet recognisable photo of Barack Obama into a high-resolution photo of a white man, highlighting how systemic racism has worked its way into these “neutral” systems.

3. Little Mix mix-up

You may have visited MSN, the Microsoft news site. Here, Microsoft decided to replace the role of a journalist with AI, a great idea for the future, right? Well, this led to a story about Jade’s personal reflections on racism being illustrated with a picture of Leigh-Anne instead.

4. Twitter cropping bias

Uploading a picture to Twitter that doesn’t fit the usual dimensions leads to Twitter’s AI cropping it to where it thinks a human face is present (or another focus of the image). In an experiment, someone combined a picture of Barack Obama and a picture of Mitch McConnell in several different arrangements, but the AI always automatically cropped to Mitch McConnell for the preview. This was regardless of positioning and other potentially interfering factors; the experimenter even made sure the colour of their ties was the same!

So why is this bias present? Part of the problem is the lack of diversity in the data sets, which we mentioned earlier. This is due to a lack of diversity among the developers of the AI: out of Google’s AI researchers, only 2.5% are black and only 10% are women. As a result, an AI system can end up disregarding the already scarce data surrounding marginalised groups, treating it as “anomalous” because so few data points are available.
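Here is a rough sketch of how scarce data can end up labelled “anomalous”. It is only an illustration under our own assumptions: the voice pitches are made-up numbers, and the rule (flag anything more than two standard deviations from the average) is one simple, common way of spotting outliers, not the method used by any particular company.

```python
from statistics import mean, pstdev

# Hypothetical voice pitches in Hz. All values are invented for illustration.
low_voices = [110, 115, 120, 125, 130] * 19   # 95 samples: well represented
high_voices = [200, 210, 220, 230, 240]       # 5 samples: underrepresented
pitches = low_voices + high_voices

centre = mean(pitches)    # pulled towards the majority group
spread = pstdev(pitches)  # standard deviation of the whole data set

def is_anomalous(pitch, threshold=2.0):
    """Flag values more than `threshold` standard deviations from the average."""
    return abs(pitch - centre) / spread > threshold

flagged = [p for p in pitches if is_anomalous(p)]
print(flagged)  # [200, 210, 220, 230, 240]
```

Every higher-pitched sample is flagged and none of the lower-pitched ones are, simply because there are so few of them that the average barely takes them into account.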

AI bias – the solution

A few years ago, the Algorithmic Accountability Act was introduced as a bill in the US. It proposed that big companies (classified as companies that hold information on at least 1 million people) would have to be checked for bias in their data sets.

To combat the lack of diversity in the data collected, which causes the underrepresentation of marginalised groups, we would need to collect even more data. But would you be happy for more of your data to be collected? We can’t talk on behalf of everyone, but for us – we wouldn’t mind our data being collected as long as it is used positively to help others. Having resources or access to our data isn’t a bad thing in itself. It becomes dangerous when someone with bad intentions is able to use it.

Diversity within companies is also improving – a tech giant, Intel, allocated $300 million to increase the proportion of women among AI professionals to 40% by 2030. Similar aims are being set for different groups in many companies.

Where we can, it is vital that we focus on using technology positively. But there are technologies, such as AI, that show bias which is out of our control. We hope that this improves in future, but people like us need to get involved in the technology industry to support this.